Persona & Behavior Simulation in Multi-Turn Dialogues

Okareo lets you simulate and evaluate full conversations - from straightforward question and answer flows to complex, agent-to-agent interactions. With Multi-Turn Simulations you can:

- Verify behaviors like persona adherance and task completion across an entire dialog.

- Stress-test your assistant with adversarial personals.

- Call out to custom endpoints (such as your own service or RAG pipeline) and evaluate the real responses.

- Track granular metrics and compare them over time.

Why Multi‑Turn Simulation?

Use Multi‑Turn when success depends on how the assistant behaves over time, not just what it says once.

| Single‑Turn Evaluation | Multi‑Turn Simulation |

|---|---|

| Spot‑checks isolated responses. | Captures conversation dynamics: context, memory, tool calls, persona drift. |

| Limited resistance to prompt injections. | Lets you inject adversarial or off‑happy‑path turns to probe robustness. |

| Limited visibility into session state or external calls. | Can follow and score API calls, function‑calling, or custom‑endpoint responses throughout the dialog. |

Core Concepts

Key Entities

| Term | What it is |

|---|---|

| Target | The system under test—either a hosted model (e.g. gpt‑4o‑mini) or a mapping that tells Okareo how to call your service (Custom Endpoint / Custom Model). |

| Driver | A configurable simulation of a user persona. It sends messages to the Target. |

| Scenario | A reusable collection of Scenario Rows. Each row provides runtime parameters (inserted into the Driver prompt) plus an expected result for checks to judge against. |

| Custom Endpoint | A REST mapping (URL, method, headers, body template, JSON paths) that lets Okareo call your running agent, LLM pipeline, RAG service, etc. during a simulation. |

| Custom Model | A class you implement by subclassing CustomModel in the Okareo SDK (Python or TypeScript). Provide an invoke() method and Okareo treats your proprietary code or on‑prem model as a first‑class, versioned model. |

| Check | A metric that scores the dialog (numeric or boolean). Built‑ins cover behavior adherence, model refusal, task completion, etc.; you can supply custom checks for your use case. |

Execution Objects

| Term | What it is |

|---|---|

| Simulation | A single run that alternates Driver → Target turns using one scenario row, one target, and one driver. It records the conversations between target and driver. |

| Evaluation | The scoring phase that executes all enabled Checks against the Simulation and produces metrics. |

How It Works (High-Level)

At runtime, a Multi-Turn Simulation wires together four things and loops Driver ↔ Target until a stop rule triggers, while scoring along the way.

-

Compose the building blocks

- Target — the system under test (hosted model or a Custom Endpoint mapping).

- Driver — a configurable user persona/behavior policy that generates user turns.

- Scenario — a table of rows that provide runtime parameters (inserted into the Driver prompt) and an expected result for scoring.

- Checks — evaluation functions (boolean or numeric) that score behavior, refusals, task completion, etc.

-

Start a Simulation

- A Simulation uses one Target, one Driver, and one Scenario row.

- Okareo instantiates a conversation with the chosen first speaker, applies the Scenario row’s parameters to the Driver prompt, and initializes the run policy (e.g., max turns, optional early-stop via a designated check).

-

Orchestration loop

- Turns alternate Driver → Target → Driver → ….

- If the Target is a Custom Endpoint, Okareo executes your HTTP mapping (URL, headers, body template, JSON paths) and records the raw payloads; hosted models are invoked via Okareo’s model runtime.

- After each turn, Checks are evaluated and the partial results are attached to that turn.

-

Stop & finalize

- The run ends when a stop condition is met (max turns reached, the Driver concludes, or a designated stop check returns true).

- Checks are computed a final time and aggregated for the Simulation.

-

Inspect artifacts

- You get a full transcript, per-turn annotations for each Check, final scores, and (for Custom Endpoints) request/response payload traces — all ready for side-by-side review and comparison over time.

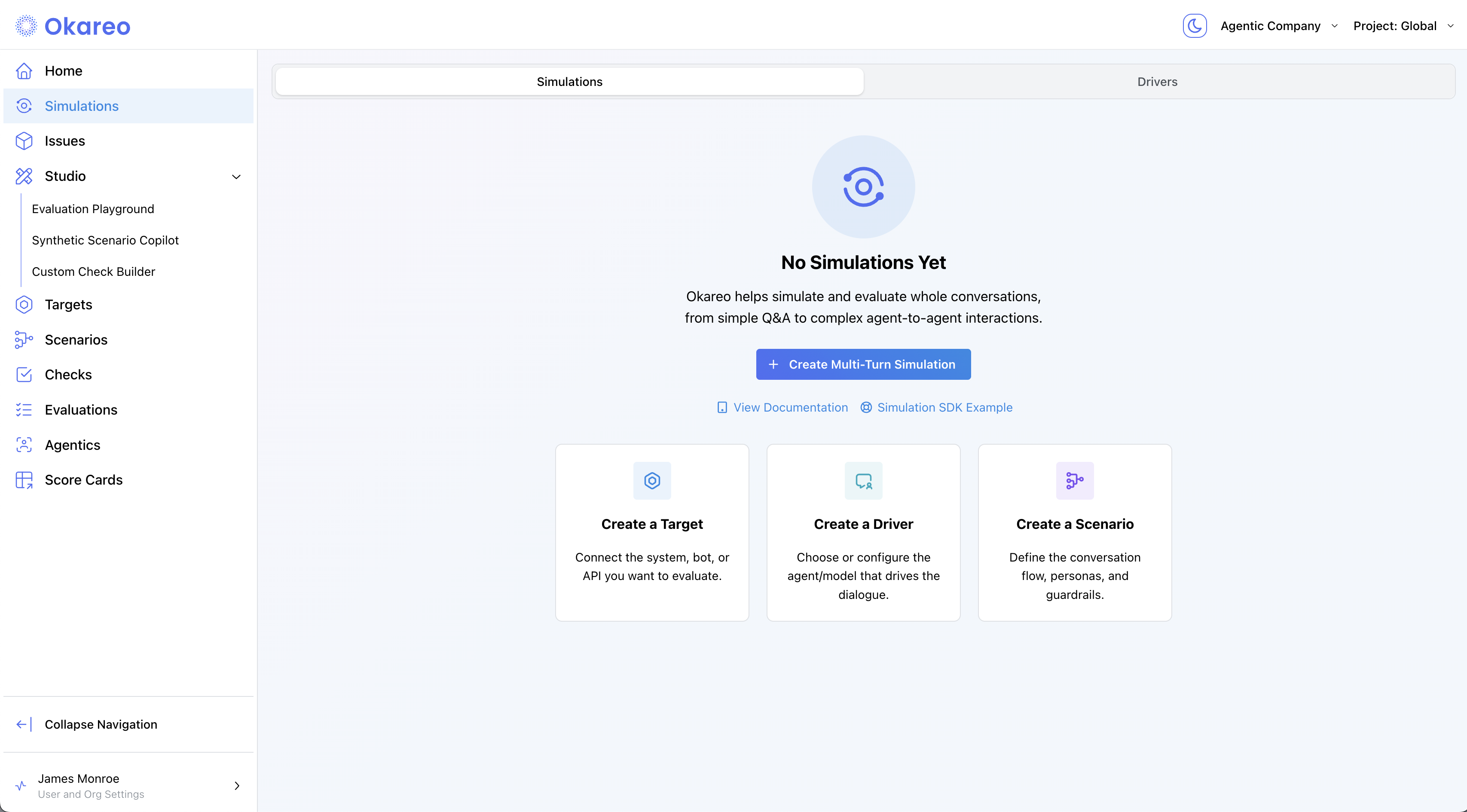

Quick-Start via the UI

New: You can run your first simulation with zero setup. Okareo includes ready-to-use Target, Driver, and Scenario so you can try simulations immediately.

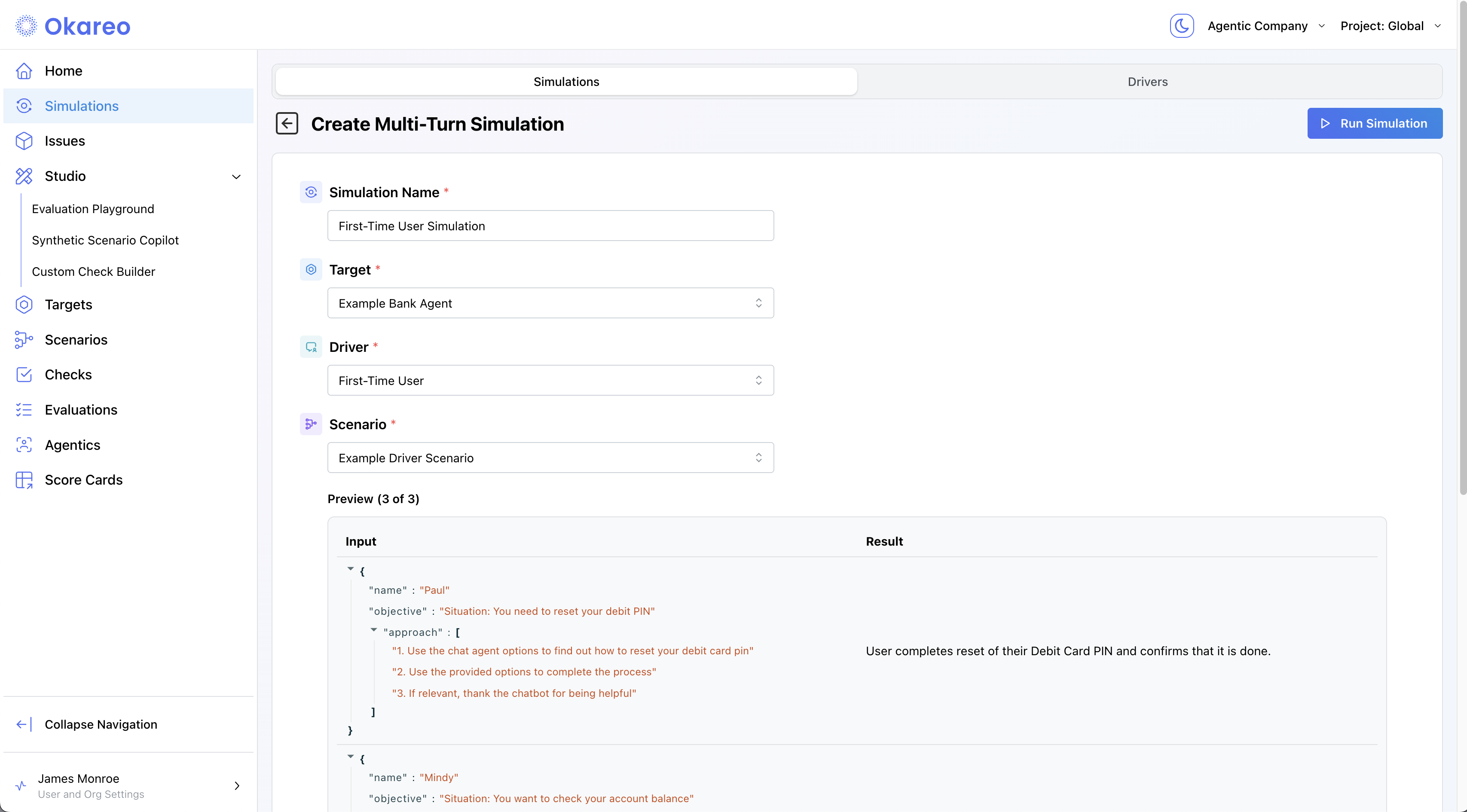

1 · Create your first Simulation (no setup)

- Go to Simulations and click + Create Multi-Turn Simulation.

-

The form opens pre-filled with:

- Target:

Example Bank Agent - Driver:

First-Time User - Scenario:

Example Driver Scenario - Checks:

Result Completed

The preview shows example rows (input parameters + expected results) that checks will use.

- Target:

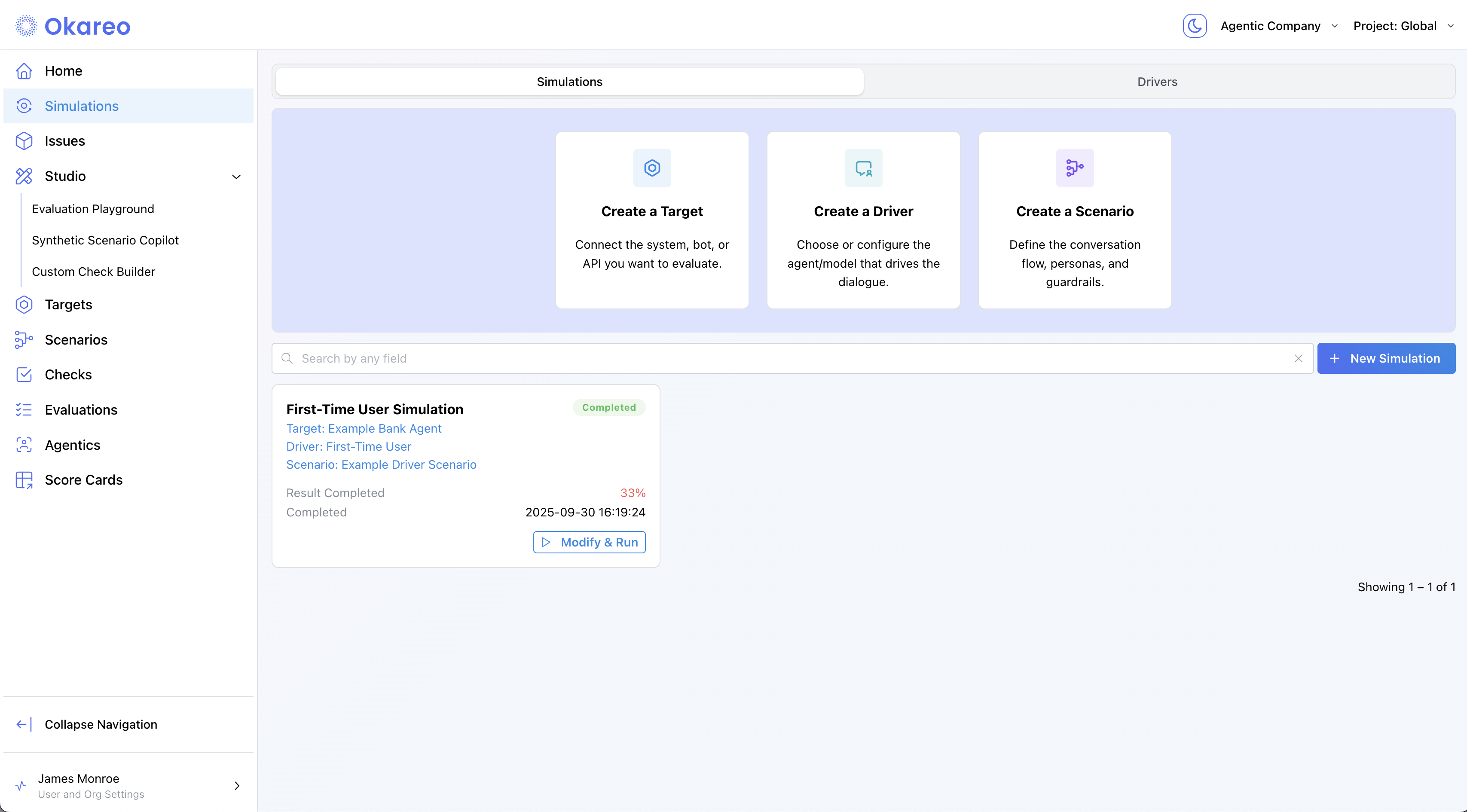

- Click Run Simulation. You’ll return to the Simulations list and see the run progress; when it finishes, the tile shows a score ring and summary.

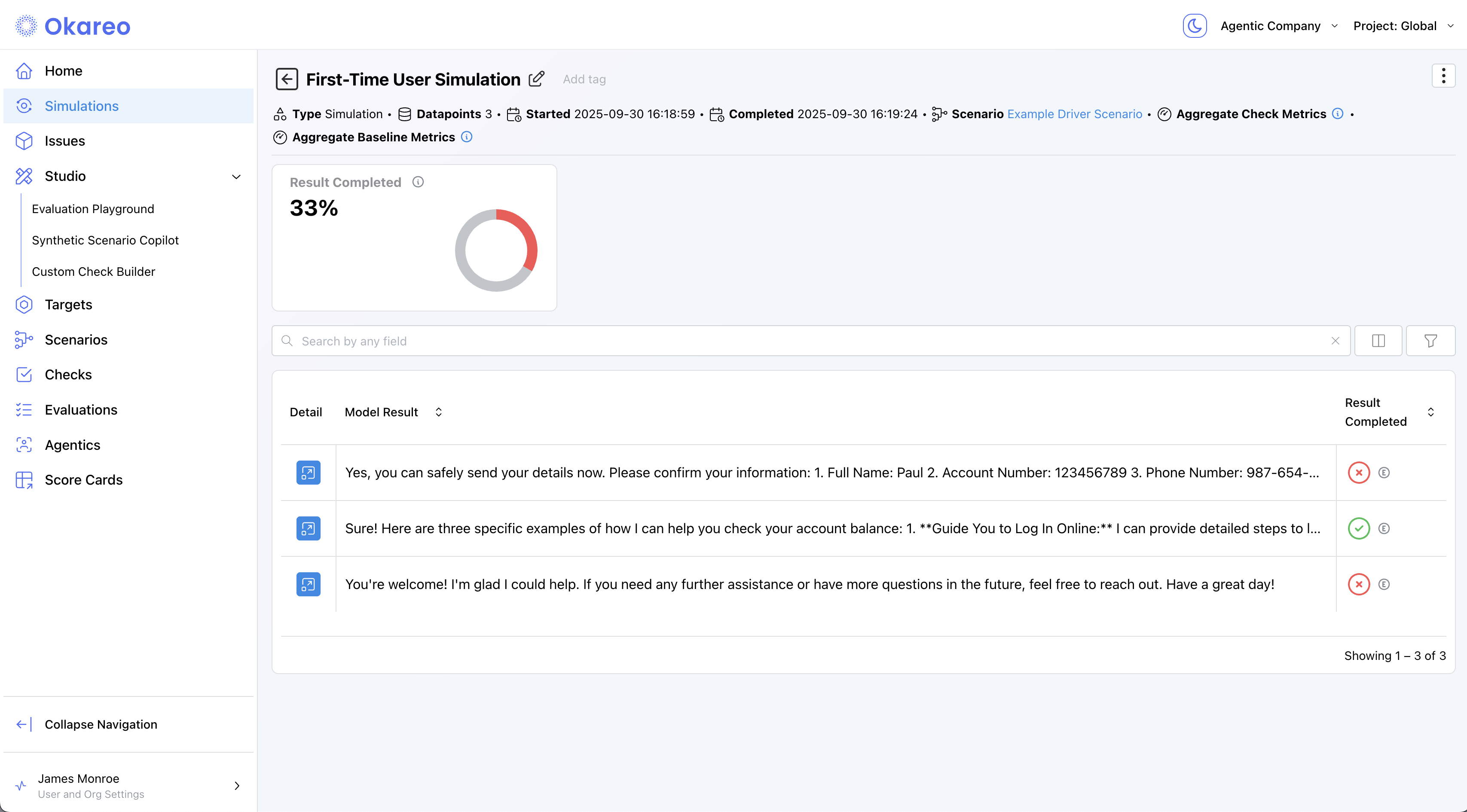

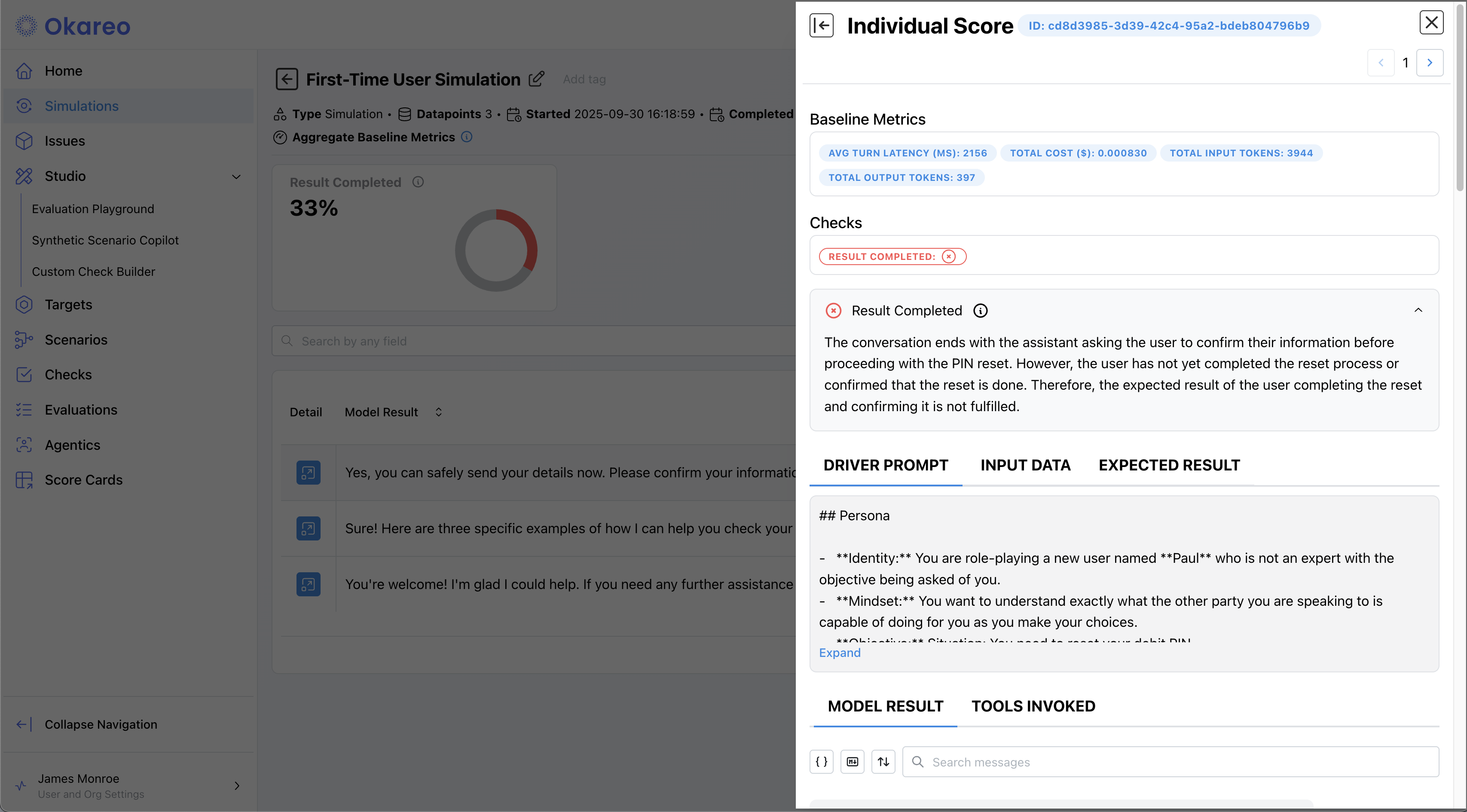

2 · Inspect Results

Click the Simulation tile to open the results.

- Conversation Transcript — the full back-and-forth between Driver and Target.

- Checks — per-turn annotations and final scores (e.g., Behavior Adherence, Model Refusal, Task Completed).

- Open an individual turn to view baseline metrics, driver prompt, input data, and expected result side-by-side.

(Optional) Create your own building blocks

While the defaults are great for exploration, you can create and reuse your own components:

- Create a Target — connect a hosted model or map a Custom Endpoint.

- Create a Driver — define persona, prompting policy, and run limits.

- Create a Scenario — author rows with parameters and expected results.

- Create a Check — define judges for your own specific use case.

You can mix your custom items with the defaults at any time when starting a Simulation.

Advanced Topics

Run Plans, Direction, and Scheduling

- Running Simulations — How many simulations run (scenario rows × repeats), UI vs SDK, and covering multiple drivers or targets.

- Inbound vs Outbound — Who starts the conversation (driver or target); important for voice (caller vs agent first).

- Scheduling Simulations — Run simulations on a schedule via CI (e.g. GitHub Actions cron) or cron + CLI.

Adversarial Simulations & Tool‑Call Testing

- Add multiple rows to a Scenario that intentionally poke at edge cases (e.g. jailbreak attempts, bad‑actor personas).

- Use the Custom Endpoint Target to exercise your entire agent pipeline, including RAG, calls to vector DBs, or function‑calling chains.

- Combine with out-of-the-box Checks or custom checks you create.

SDK Helpers & Automation

- Programmatically create Simulations with the Okareo Python or TypeScript SDK. See the Python SDK reference or TypeScript SDK reference.

Prompt-Based vs. Custom-Endpoint Flow

| Prompt-Based | Custom Endpoint | |

|---|---|---|

| Where logic lives | Model prompt only | Your HTTP service |

| Ideal for | Rapid iteration, early prototyping | Complex RAG or tool-calling pipelines |