Getting Started with Okareo

This guide walks you through two fundamental capabilities with simple code examples: running a Prompt Evaluation to assess model performance, and setting up Monitoring to track your Agents and Prompts in production.

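If you haven't already, get an Okareo API token and set it as the OKAREO_API_KEY environment variable so the examples below work. The evaluation examples also call OpenAI, so set OPENAI_API_KEY as well.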
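The Python example reads its keys with `os.environ.get`, so a missing key may not be reported until a later API call fails. The preflight check below reports missing variables up front. It is a minimal sketch that assumes the two variable names the examples use, OKAREO_API_KEY and OPENAI_API_KEY. `missing_env_vars` is a hypothetical helper, not part of the Okareo SDK.

```python
import os
from typing import List, Mapping

# Variable names used by the examples in this guide (assumption: you need both).
REQUIRED_VARS = ("OKAREO_API_KEY", "OPENAI_API_KEY")


def missing_env_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Hypothetical helper: return required variable names that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name)]


missing = missing_env_vars()
if missing:
    print("Set these environment variables first:", ", ".join(missing))
else:
    print("OKAREO_API_KEY and OPENAI_API_KEY are set.")
```

Passing a plain dict as `env` makes the check easy to test without touching your real environment.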

Okareo is designed to help your team (including Developers, Product Managers, and Subject Matter Experts) rapidly iterate on, monitor, and improve your Agents. It provides a unified platform for LLM Evaluation, Real Time Monitoring, Synthetic Data Generation, Agent Simulation, and Custom Judges, supporting both API and UI workflows.

Evaluating a Prompt

Ensuring the quality and reliability of your prompt and the model supporting it is essential both before and after deployment. Okareo's LLM Evaluation capabilities let you systematically test your models and score them with checks such as built-in metrics or custom judges.

Why evaluate? Evaluation provides objective metrics on model performance. Whether you're comparing different models, tracking improvements over time, or ensuring a model meets specific requirements, evaluation is key to confident iteration and deployment.

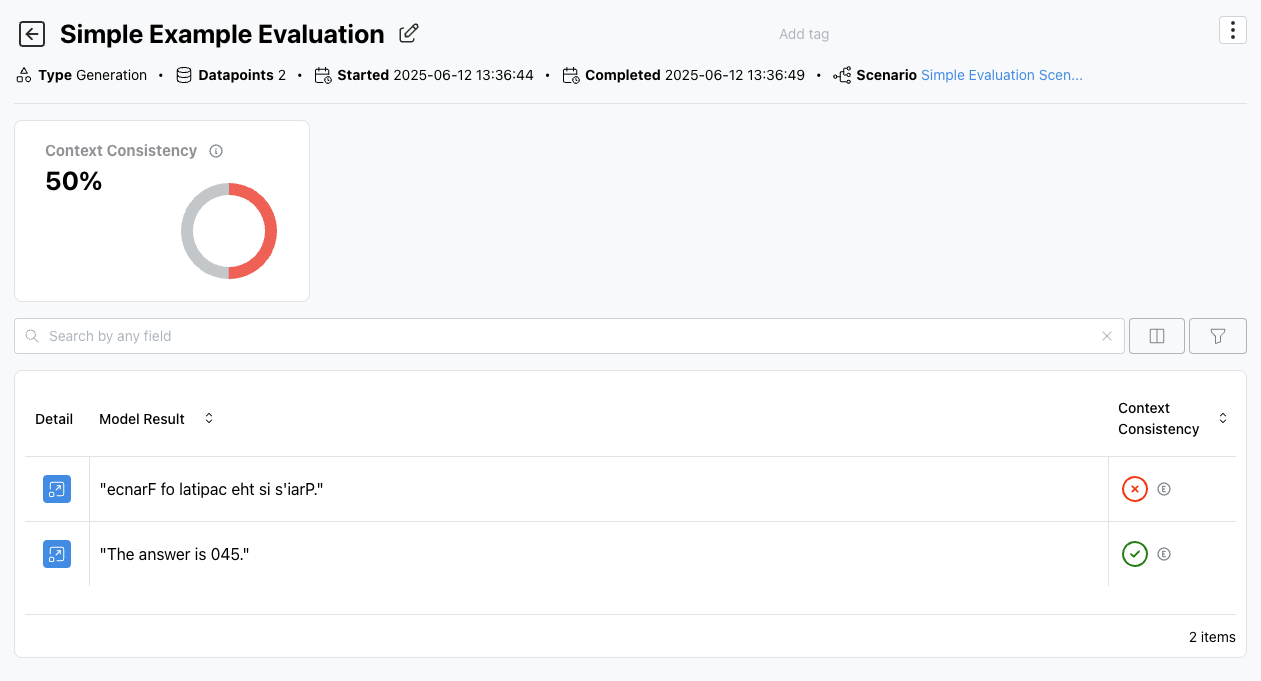

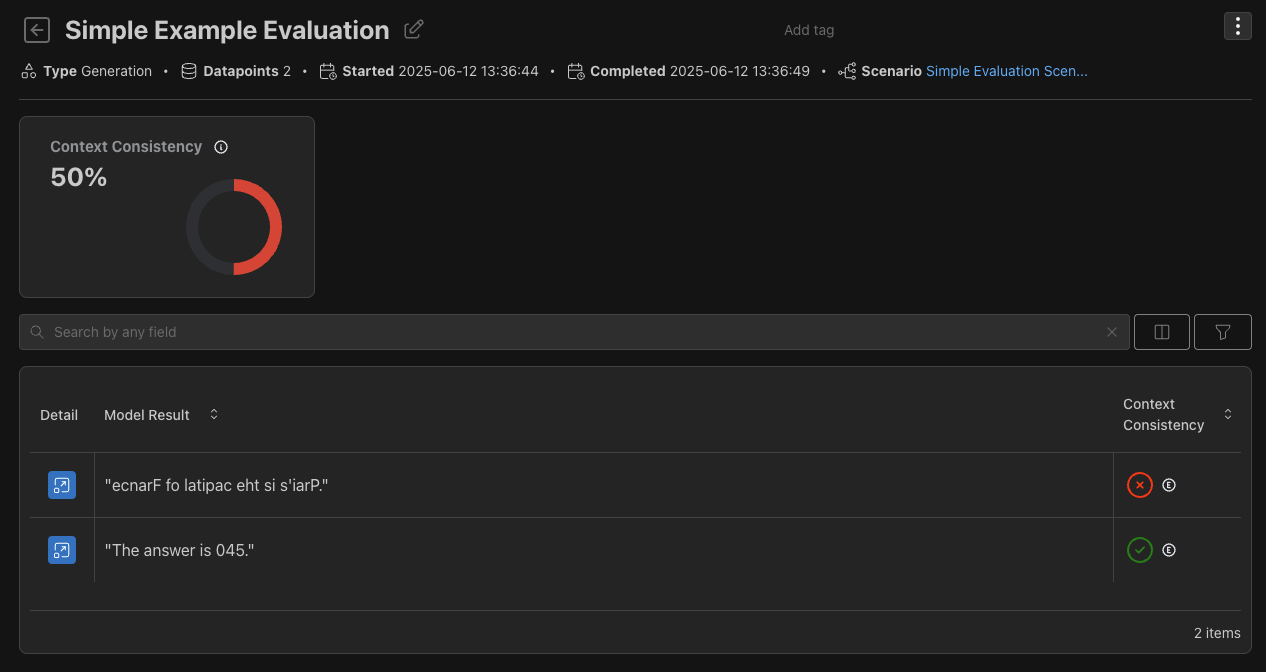

A simple evaluation in Okareo involves providing a scenario and choosing an evaluation check (such as a built-in metric or a custom judge) to score the results. Here we use a built-in judge called context_consistency, which checks that the output is consistent with the expected scenario result and the context provided. In this example, the system prompt instructs the model to reverse numeric answers, but the completion also reversed the answer to "What is the capital of France?".
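To make the expected behavior concrete, here is a small local sketch of the rule the system prompt describes, applied to the two scenario rows. It does not call Okareo or OpenAI. `apply_reverse_rule` is a hypothetical helper written for illustration, and it assumes "numeric answer" means a string made up only of digits.

```python
def apply_reverse_rule(answer: str) -> str:
    """Hypothetical helper: reverse an answer only if it consists of digits."""
    text = answer.strip()
    return text[::-1] if text.isdigit() else text


# The same two rows used in the scenario, paired with their expected results.
scenario_rows = [
    ("What is the capital of France?", "Paris"),
    ("What is one-hundred and fifty times 3?", "450"),
]

for question, expected in scenario_rows:
    # A completion that follows the system prompt should match this value.
    print(f"{question!r}: {apply_reverse_rule(expected)}")
```

Under this rule "450" becomes "054" while "Paris" comes back unchanged, so a completion such as "siraP" violates the instruction.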


```python
# Save this flow as function_eval.py and place it in your .okareo/flows folder
import os

from okareo import Okareo
from okareo.model_under_test import OpenAIModel
from okareo_api_client.models.seed_data import SeedData
from okareo_api_client.models.test_run_type import TestRunType
from okareo_api_client.models.scenario_set_create import ScenarioSetCreate

# Set your Okareo API key
OKAREO_API_KEY = os.environ.get("OKAREO_API_KEY")
okareo = Okareo(OKAREO_API_KEY)

# Create a scenario for the evaluation
scenario = okareo.create_scenario_set(
    ScenarioSetCreate(
        name="Simple Scenario Example",
        seed_data=[
            SeedData(
                input_="What is the capital of France?",
                result="Paris",
            ),
            SeedData(
                input_="What is one-hundred and fifty times 3?",
                result="450",
            ),
        ],
    )
)

# Register the model to use in the test run
model_under_test = okareo.register_model(
    name="Simple Model Example",
    model=OpenAIModel(
        model_id="gpt-4o-mini",
        temperature=0,
        system_prompt_template="Always return numeric answers backwards. e.g. 1234 becomes 4321.",
        user_prompt_template="{scenario_input}",
    ),
    update=True,
)

# Run the evaluation
evaluation = model_under_test.run_test(
    name="Simple Evaluation Example",
    scenario=scenario,
    test_run_type=TestRunType.NL_GENERATION,
    api_key=os.environ.get("OPENAI_API_KEY"),
    checks=[
        "context_consistency",
    ],
)

# Output the results link
print(f"See results in Okareo: {evaluation.app_link}")
```

```typescript
import { Okareo, OpenAIModel, TestRunType } from "okareo-ts-sdk";

const okareo = new Okareo({ api_key: process.env.OKAREO_API_KEY || "" });

const main = async () => {
  try {
    const project_id = "8c69ab40-7e5e-4449-ad8c-f1e271cf62e6"; // Replace with your actual project ID

    // Create the scenario to evaluate the model with
    const scenario = await okareo.create_scenario_set({
      name: "Simple Scenario Example",
      project_id,
      seed_data: [
        {
          input: "What is the capital of France?",
          result: "Paris",
        },
        {
          input: "What is one-hundred and fifty times 3?",
          result: "450",
        },
      ],
    });

    // Register the model under test
    const model_under_test = await okareo.register_model({
      name: "Simple Model Example",
      project_id: project_id,
      models: {
        type: "openai",
        model_id: "gpt-4o-mini",
        system_prompt_template:
          "Always return numeric answers backwards. e.g. 1234 becomes 4321.",
        user_prompt_template: "{scenario_input}",
      } as OpenAIModel,
      update: true,
    });

    // Run the evaluation
    const evaluation = await model_under_test.run_test({
      name: "Simple Example Evaluation",
      project_id,
      scenario_id: scenario.scenario_id,
      model_api_key: process.env.OPENAI_API_KEY || "",
      type: TestRunType.NL_GENERATION,
      calculate_metrics: true,
      checks: ["context_consistency"],
    });

    console.log("Evaluation Results:", evaluation.app_link);
  } catch (error) {
    console.error(error);
  }
};

main();
```

This example shows the basic flow: prepare your data as a scenario, select metrics as checks, and submit both to Okareo to run an evaluation. Okareo processes this data and reports metrics on your model's performance. Here the math question is answered correctly and the system prompt's instruction to reverse the digits is followed. However, the answer to "What is the capital of France?" is not numeric and should not have been reversed.
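How per-row check results roll up into a metric can be illustrated with a toy, deterministic stand-in. This is not how the built-in context_consistency judge works; the `ScoredRow` type, the `follows_reverse_rule` check, and the completions are all hypothetical, chosen only to mirror the outcome described above.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ScoredRow:
    question: str
    expected: str    # the scenario's expected result
    completion: str  # hypothetical model output


def follows_reverse_rule(row: ScoredRow) -> bool:
    # Toy check: digit-only answers should come back reversed, anything else unchanged.
    target = row.expected[::-1] if row.expected.isdigit() else row.expected
    return row.completion.strip() == target


def pass_rate(rows: List[ScoredRow]) -> float:
    # Fraction of rows that pass the check.
    return sum(follows_reverse_rule(r) for r in rows) / len(rows)


rows = [
    ScoredRow("What is the capital of France?", "Paris", "siraP"),
    ScoredRow("What is one-hundred and fifty times 3?", "450", "054"),
]

for row in rows:
    print(f"{row.question!r}: {'pass' if follows_reverse_rule(row) else 'fail'}")
print(f"pass rate: {pass_rate(rows):.0%}")
```

With these hypothetical completions, the capital question fails, the math question passes, and the pass rate is 50%.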

These examples cover only the basics. Learn more about Agent Simulations, check metrics, scenario datasets, and how to interpret different types of evaluation results.

Monitoring

Understanding how your LLM applications perform in the real world is critical. Okareo's Monitoring provides Real Time Behavioral Alerting, allowing you to capture and analyze issues directly from your runtime environment. You can even use checks to orchestrate model calls in your runtime, drive retry logic, or reject completions based on risk scores or other factors.
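The retry pattern mentioned above can be sketched in plain Python. Everything here is hypothetical and uses no Okareo API: `generate` stands in for your model call, `score` stands in for whatever check returns a risk score, and the 0.5 threshold and three attempts are arbitrary defaults.

```python
from typing import Callable, Iterator, Optional


def generate_with_retry(
    generate: Callable[[str], str],
    score: Callable[[str], float],
    prompt: str,
    threshold: float = 0.5,
    max_attempts: int = 3,
) -> Optional[str]:
    """Return the first completion whose risk score is below threshold, else None."""
    for _ in range(max_attempts):
        completion = generate(prompt)
        if score(completion) < threshold:
            return completion  # accepted
    return None  # every attempt was rejected; the caller decides what to do next


# Hypothetical stand-ins: a "model" that answers badly once, then well,
# and a "check" that treats any completion containing "risky" as high risk.
canned: Iterator[str] = iter(["risky answer", "safe answer"])


def fake_generate(prompt: str) -> str:
    return next(canned)


def fake_score(completion: str) -> float:
    return 0.9 if "risky" in completion else 0.1


print(generate_with_retry(fake_generate, fake_score, "Summarize the ticket."))
```

Here the first completion is rejected and the second is returned. Returning `None` rather than raising leaves the fallback (a canned reply, escalation, or a different model) to the caller.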

Why track errors? Production environments are dynamic. Models can encounter unexpected inputs, drift in performance, or produce undesirable outputs and behaviors. Tracking these errors in real time helps you quickly identify problems, understand their context, and prioritize fixes.

Okareo allows you to capture interactions with your model and flag specific events as errors and warnings. This data is then available in the Okareo platform for analysis.

Make sure to get an Okareo API Token if you don't have one already. See How to get an Okareo API Token to learn more.

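The following examples send OpenAI client requests through the Okareo proxy: the base URL points at the proxy, the api-key header carries your Okareo API key, and the client's API key remains your LLM provider's key. See Okareo Proxy to learn more about OTel integration and LLM routing to Gemini, Anthropic, Azure, and others.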


```python
from openai import OpenAI

openai = OpenAI(
    base_url="https://proxy.okareo.com",
    default_headers={"api-key": "<OKAREO_API_KEY>"},
    api_key="<YOUR_LLM_PROVIDER_KEY>",
)
```

```typescript
import OpenAI from "openai";

const openai = new OpenAI({
  baseURL: "https://proxy.okareo.com",
  defaultHeaders: { "api-key": "<OKAREO_API_KEY>" },
  apiKey: "<YOUR_LLM_PROVIDER_KEY>",
});
```
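The proxy snippets use placeholder strings for both keys. A minimal sketch, assuming you keep the keys in the OKAREO_API_KEY and OPENAI_API_KEY environment variables (the names the evaluation examples use), builds the same client arguments without hard-coding secrets. `proxy_client_kwargs` and `make_client` are hypothetical helper names; the arguments they pass are exactly the ones in the Python proxy snippet.

```python
import os

OKAREO_PROXY_URL = "https://proxy.okareo.com"


def proxy_client_kwargs(okareo_api_key: str, provider_api_key: str) -> dict:
    """Hypothetical helper: keyword arguments for openai.OpenAI using the Okareo proxy."""
    if not okareo_api_key or not provider_api_key:
        raise ValueError("Both the Okareo API key and the LLM provider key are required.")
    return {
        "base_url": OKAREO_PROXY_URL,
        # Your Okareo API key goes in the api-key header, as in the proxy snippet.
        "default_headers": {"api-key": okareo_api_key},
        # Your LLM provider key, as in the proxy snippet.
        "api_key": provider_api_key,
    }


def make_client():
    # Imported here so proxy_client_kwargs can be used without the openai package installed.
    from openai import OpenAI

    return OpenAI(
        **proxy_client_kwargs(
            os.environ.get("OKAREO_API_KEY", ""),
            os.environ.get("OPENAI_API_KEY", ""),
        )
    )
```

Calling `make_client()` returns a client you can use just like the `openai` object in the snippet; keeping argument construction in a separate function lets you check it without network access.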

Detailed instructions on setting up monitoring, using the proxy, and exploring errors in the UI are covered in the Monitoring and Proxy guides.